The One-Eyed Leading the Blind – Why you need more than data science and machine learning to create knowledge from data

Many job matching and recommendation engines currently on the market are based on machine learning (ML) and promoted as revolutionizing HR tech. However, despite all the work put into improving models, approaches and data over the past decade, the results are still far from what users, developers and data scientists hope for. Yet, the consensus seems to be that if we just get even more data and even better models, and throw even more time, money and data scientists at the problem, we will solve it with machine learning. This may be true, but there is also ample reason to at least think about trying a different strategy. A lot of very clever people have been working very hard for many years to get this approach to work, and the results are – let’s face it – still really bad. In this series of posts, we are going to shed some light on some of the pitfalls of this approach that may explain the lack of significant improvement in recent years.

One of the key aspects that many experts have come to realize is that because job and skills data is so complex, ML-based systems need to be fed with some form of knowledge representation, typically a knowledge graph. The idea is that this will help the ML models better understand all the different terms used for job titles, skills, trainings and other job-related concepts, as well as the intricate relationships between them. With a better understanding, the model can then provide more accurate job or candidate recommendations. So far, so good. So the data scientists and developers are tasked with working out how to generate this knowledge graph. If you put a group of ML experts in a room and ask them to come up with a way to create a highly complex, interconnected system of machine-readable data, what do you think their approach will be? That’s right. Solve it with ML. Get as much data as you can and a model to work it all out. However, there are multiple critical issues with this approach. In this post, we’ll focus on two of these concerns that come into play right from the start: you need the right data, and you need the right experts.

The data

ML uses algorithms to find patterns in datasets. To discern patterns, you need to have enough data. And to find patterns in the data that more or less reflect reality, you need data that more or less reflects reality. In other words, ML can only solve problems if there is enough data of good quality and an appropriate level of granularity; and the harder the problem, the more data your system will need. Generating a knowledge graph for jobs and associated skills with ML techniques is a hard problem, which means you need a lot of data. Most of this data is only available in unstructured and highly heterogeneous documents and datasets like free text job descriptions, worker profiles, resumes, training curricula, and so on. These documents are full of unstandardized or specialist terms, synonyms and acronyms, descriptive language, or even graphical representations. There are ambiguous or vague terms, and different notions of skills, jobs, and education. And there is a vast amount of highly relevant implicit information like the skills and experience derived from three years in a certain position at a certain company. All this is supposed to be fed into an ML system which can accurately parse, structure and standardize all relevant information as well as identify all the relevant relationships to create the knowledge graph.

Parsing and standardizing this data is already an incredibly challenging task, which we’ll discuss in another post. For now, let’s suppose you know how to build this system. No matter how you design it, because it’s based on ML to solve a complex task, it’s going to need a lot of data on each concept you want to cover in your knowledge graph. Data on the concept itself and on its larger context. For instance, it will need a large amount of data to learn that data scientists, data ninjas and data engineers are closely related, but UX designers, fashion designers and architectural designers are not. Or that CPA is an abbreviation of Certified Public Accountant, which has nothing in common with a CPD tech in a hospital.
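To make the point about surface form concrete, here is a minimal sketch of two naive string-similarity measures. It is an illustration under stated assumptions, not a description of how any real system works: the helper names `token_jaccard` and `char_similarity` are made up for this example, and the only library call used is `difflib.SequenceMatcher` from the Python standard library.

```python
from difflib import SequenceMatcher


def token_jaccard(a: str, b: str) -> float:
    """Share of lowercase word tokens that two terms have in common."""
    tokens_a, tokens_b = set(a.lower().split()), set(b.lower().split())
    if not tokens_a or not tokens_b:
        return 0.0
    return len(tokens_a & tokens_b) / len(tokens_a | tokens_b)


def char_similarity(a: str, b: str) -> float:
    """Character-level similarity in [0, 1], computed by difflib."""
    return SequenceMatcher(None, a.lower(), b.lower()).ratio()


if __name__ == "__main__":
    # Word overlap cannot tell related titles from unrelated ones.
    for a, b in [("data scientist", "data engineer"),
                 ("UX designer", "fashion designer")]:
        print(f"{a!r} vs {b!r}: token overlap = {token_jaccard(a, b):.2f}")

    # Character overlap ranks the wrong pair as the closer match.
    for a, b in [("CPA", "CPD"), ("CPA", "Certified Public Accountant")]:
        print(f"{a!r} vs {b!r}: char similarity = {char_similarity(a, b):.2f}")
```

On surface form alone, the related pair and the unrelated pair of titles get the same word-overlap score, and CPA looks far closer to CPD than to its own expansion. Whatever separates these cases has to come from context data or from curated knowledge, which is exactly where the volume requirement comes in.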

This may be feasible for many common white-collar jobs like data scientists and social media experts, because their respective job markets are large and digitalized. But how much data do you think is out there for cleaner/spotters, hippotherapists or flavorists? You can only solve a problem with ML that you have enough data for.
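A quick way to see how thin the data is for niche occupations is to count labelled examples per target concept before training anything. The sketch below is only an illustration: the function name `under_supported`, the threshold, and the toy counts are all invented for this example and say nothing about real job-market volumes.

```python
from collections import Counter
from typing import Dict, Iterable


def under_supported(targets: Iterable[str],
                    observed_labels: Iterable[str],
                    min_examples: int) -> Dict[str, int]:
    """Map each target concept with fewer than min_examples to its count."""
    counts = Counter(observed_labels)
    # Counter returns 0 for concepts that never occur in the data.
    return {t: counts[t] for t in targets if counts[t] < min_examples}


if __name__ == "__main__":
    # Toy labels, invented for illustration only.
    labels = (["data scientist"] * 500
              + ["social media expert"] * 300
              + ["flavorist"] * 2
              + ["hippotherapist"])
    targets = ["data scientist", "social media expert",
               "flavorist", "hippotherapist", "cleaner/spotter"]
    print(under_supported(targets, labels, min_examples=50))
```

Every concept this check returns is one the model has little or nothing to learn from, however good the architecture is.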

The experts

Let’s suppose (against all odds) that you solved the data problem. You can now choose one of three possible approaches:

- Eyes wide shut: Build the system, let it generate the knowledge graph autonomously and feed the result into your job recommendation engine without ever looking at it.

- Trust but verify: Build the system, let it generate a knowledge graph autonomously and fine-tune the result with humans.

- Guide and grow: Build the system and let it generate a knowledge graph using human input along the way.

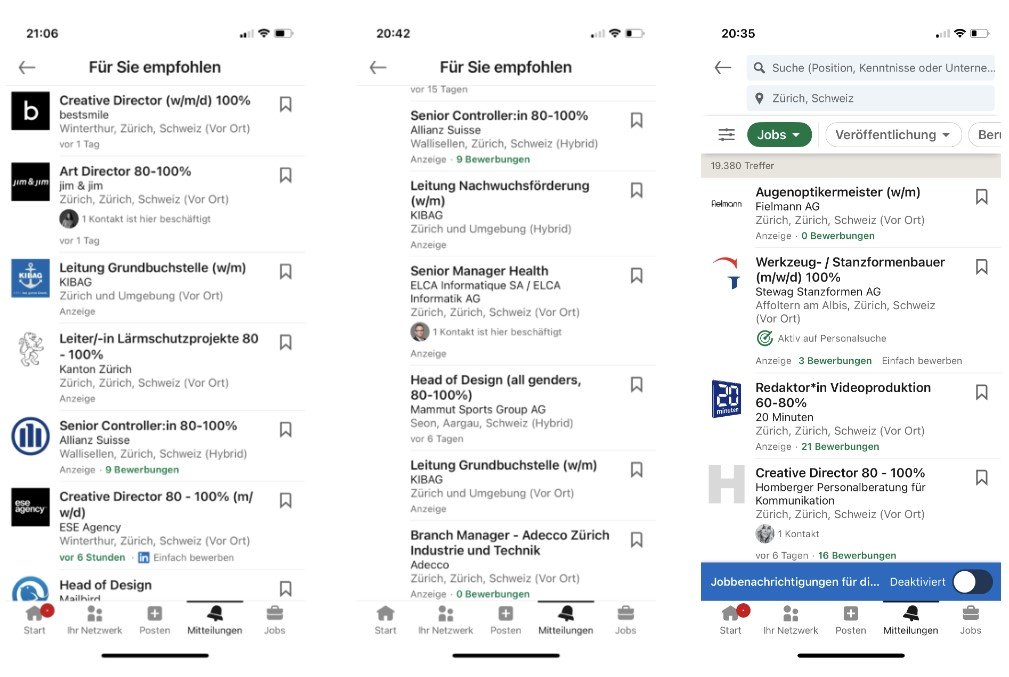

Based on what’s currently on the market, one wonders if most knowledge graphs in HR tech are built on the eyes wide shut approach. We have covered the results of several such systems in previous posts (e.g., here, here and here). You may also have come across recommendations like the ones below yourself.

If not, it may be that the humans involved in the process are all data scientists and machine learning experts, i.e., people who know all about building the system itself, instead of domain experts who know all about what the system is supposed to produce. Whether you fine-tune the results at the end or give the system input or feedback along the way, at some point, you will have to deal with domain questions like the difference between a cleaner/spotter and a (yard) spotter, what exactly a community wizard is, or whether a forging operator can work as an upset operator. And then of course, all the associated skills. If you want a job matching engine to produce useful results, this is the kind of information your knowledge graph needs to encode. Otherwise you just perpetuate what’s been going on so far, namely:

You simply cannot expect data scientists to assess the quality of this kind of knowledge representation. As IKEA said at the KGC in New York: domain knowledge takes years of experience to accumulate; it’s much easier to learn how to curate a knowledge graph. And IKEA is “just” talking about their company-specific knowledge, not domain knowledge in a vast number of different industries, different specialties, different company vocabularies, and so on. You need an entire team of domain experts from all kinds of different fields and specialties to assess and correct a knowledge graph of this magnitude and depth.

Finally, what if you want a knowledge graph that can be used for job matching in several languages? Again, an ML expert may think there’s a model or a database for that. Let’s look at databases first. Probably one of the most extensive multilingual databases for job and skills terminology is the ESCO taxonomy. However, apart from not being complete, it is riddled with mistakes. For instance, one of the skills listed for a tanning consultant is (knowledge of) tanning processes. Sounds good, right? However, if you look at the definitions or the classification numbers for these two concepts, you see that a tanning consultant typically works in a tanning salon while tanning processes have to do with manufacturing leather products. There are many, many more examples like this in ESCO. Do you really want to feed this into your system? Maybe not.

What about machine translation? One of the best ML-based translators around is DeepL. According to DeepL, the German translation of yard spotter is Hofbeobachter. If you have very basic knowledge of German, this may seem correct because Hof = yard and Beobachter = spotter. But Hofbeobachter is actually the German term for a royal correspondent, a journalist who specializes in reporting on royalty. A yard spotter, or a spotter, is someone who typically moves or directs, checks and maintains materials or equipment in a yard, dock or warehouse. The correct German term would be Einweiser, which translates to instructor or usher in DeepL. There is a simple explanation for these faulty translations: there is just not enough data connecting the German and English terms in this specific context. So, you need an entire team of multilingual domain experts from different fields and specialties to assess and correct these translations – or just do the translations by hand.

And simply translating isn’t enough. You need to localize the content. Someone has to make sure that your knowledge graph contains the information that a carpenter in a DACH country has very different qualifications to a carpenter in the US. Or that Colombia and Peru use the same expressions for very different levels of education. Again, this is not a task for a data scientist. Hire domain experts and teach them how to curate a knowledge graph.
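The tanning consultant example suggests one simple sanity check you can run before feeding a taxonomy into your system: flag occupation-skill links whose classifications point to different sectors. The sketch below is a hedged illustration only. The `Concept` class, the `links_to_review` function and the sector tags are hypothetical stand-ins invented for this example; they are not real ESCO fields or codes.

```python
from dataclasses import dataclass
from typing import Iterable, List, Tuple


@dataclass(frozen=True)
class Concept:
    label: str
    sector: str  # hypothetical sector tag, NOT a real ESCO code


def links_to_review(links: Iterable[Tuple[Concept, Concept]]) -> List[Tuple[str, str]]:
    """Return (occupation, skill) label pairs whose sector tags differ."""
    return [(occ.label, skill.label)
            for occ, skill in links
            if occ.sector != skill.sector]


if __name__ == "__main__":
    consultant = Concept("tanning consultant", "personal care")
    processes = Concept("tanning processes", "leather manufacturing")
    service = Concept("customer service", "personal care")

    flagged = links_to_review([(consultant, processes), (consultant, service)])
    print(flagged)  # candidates for human review, not confirmed errors
```

A check like this only produces a list of candidates. Plenty of legitimate skills cross sector boundaries, so deciding which flagged links are actually wrong still takes someone who knows the occupations involved.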

Of course, you can carry on pursuing a pure ML/data science strategy for your HR Tech solutions and applications if you insist. But – at the risk of sounding like a broken record – a lot of very smart people have been working on this for many years with generous budgets, and no matter how hard the marketing department sings its praises, the results are still appalling. Anyone with a good sense of business should realize that it’s time to leave the party. If you’re still not convinced, keep an eye out for our next few posts in this series. We’re going to take down this mythical ML system piece by piece and model by model and show you: if you want good results anytime soon, you’ll need more than just data science and machine learning.
